The 5 Layers of AI: How the World is Being Rebuilt from the Power Grid Up

Khalil Adib

Senior AI Engineer

As 2025 comes to a close, the world of AI looks nothing like it did just a few years ago. Back in 2021 or 2022, whenever I told people I was studying AI, I'd get a blank stare or a confused look, followed by: "Wait, what exactly is AI?"

Fast forward to today, and AI is everywhere. It's no longer just a "futuristic" concept; it's the engine behind your favourite online store's recommendations, the Face ID that unlocks your phone, and the autocorrect that (mostly) saves your typos. But while the public sees a simple chatbot interface, the reality "under the hood" is a massive industrial race involving trillions of dollars, national security, and global energy grids.

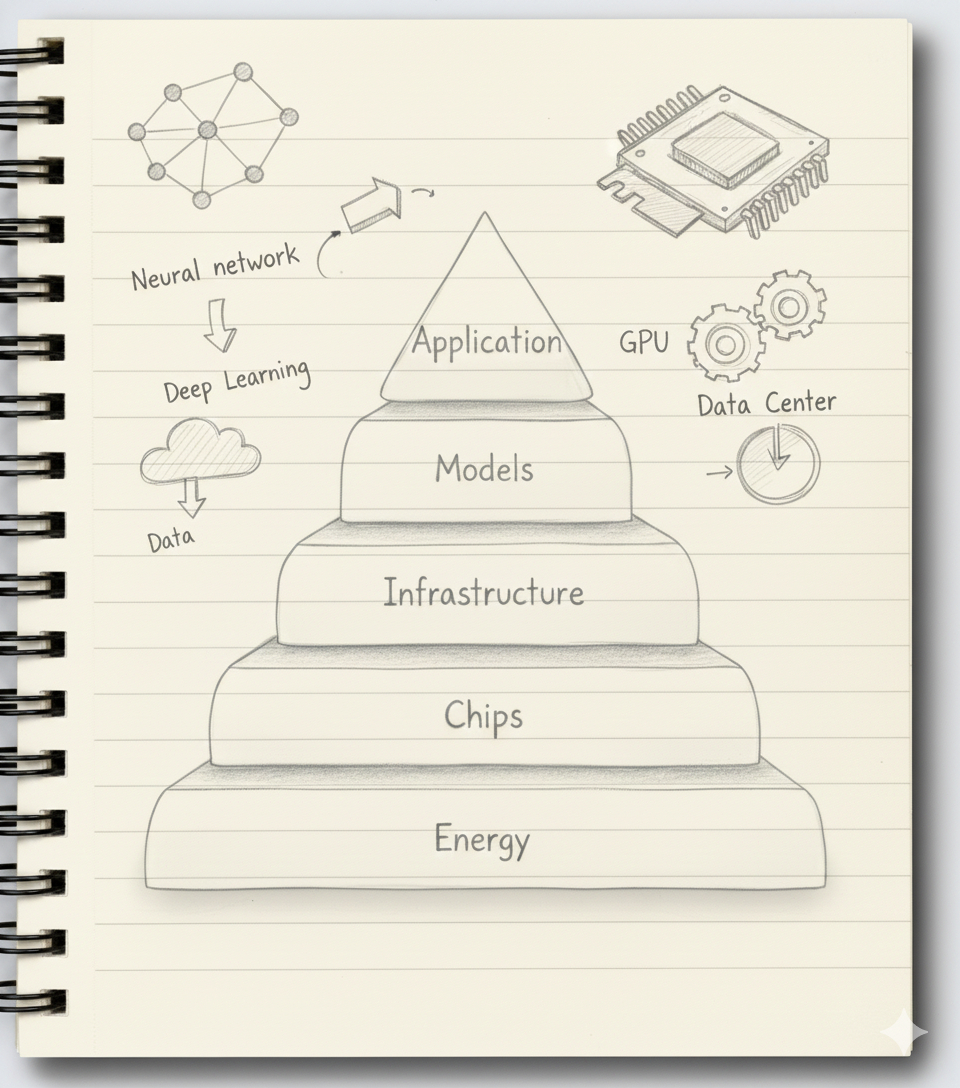

In a now-famous breakdown, Jensen Huang, the CEO of NVIDIA (now a $4.6 trillion giant), identified the 5 Layers of AI. Here is how they are shaping the world as we enter 2026.

1. Energy: The Invisible Backbone

Everything in AI eventually boils down to electricity. When we say a model runs "in the Cloud," we really mean it's running on a high-powered computer in a massive warehouse—and those computers are hungry.

The growth is staggering. By 2030, global data centres are projected to consume nearly 1,000 terawatt-hours (TWh) of electricity a year, slightly more than Japan's entire electricity consumption today.
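Annual figures in TWh are hard to picture, so it helps to convert them into the average power a grid has to supply around the clock. A minimal sketch, assuming a flat 8,760-hour year and perfectly steady demand (real load varies through the day and year):

```python
# Convert an annual electricity figure (TWh per year) into the average
# continuous power draw (GW) it implies.

HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def average_power_gw(twh_per_year: float) -> float:
    """Average power in gigawatts for a given annual consumption in TWh."""
    # 1 TWh = 1,000 GWh, and GWh divided by hours gives GW
    return twh_per_year * 1_000 / HOURS_PER_YEAR


if __name__ == "__main__":
    for label, twh in [("Data centres, low estimate", 945),
                       ("Data centres, high estimate", 1_000)]:
        print(f"{label}: {twh} TWh/yr is about {average_power_gw(twh):.0f} GW, non-stop")
```

Under that assumption, ~1,000 TWh a year works out to roughly 114 GW of continuous demand, on the order of a hundred one-gigawatt power plants running without a break.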

Projected 2030 Electricity Consumption, Selected Comparisons (TWh per year)

| Country / Entity | Projected Consumption | Context |
|---|---|---|
| China | ~10,000+ TWh | Global manufacturing leader |
| United States | ~4,500 TWh | Highly electrified economy |
| Global Data Centres | ~945 – 1,000 TWh | About Japan's current total (~950 TWh) |
| Russia | ~850 TWh | Heavy industrial focus |
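To keep up, some companies are looking beyond Earth to "space-based data centres." Startups like Starcloud and projects like Google's Suncatcher are exploring putting servers into orbit. Why? In the right orbit, solar panels sit in near-constant sunlight, unfiltered by clouds or atmosphere. Proponents also point to cooling: deep space is a cold place to dump heat, although in a vacuum heat can only leave by radiation, so orbital servers need large radiator panels rather than fans or water.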
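On Earth, fans, chillers and water carry heat away from servers; in a vacuum, the only way out is thermal radiation, governed by the Stefan–Boltzmann law (radiated power = emissivity × σ × area × T⁴). The sketch below estimates radiator area per megawatt of heat. The 300 K radiator temperature, 0.9 emissivity, and a one-sided radiator that absorbs no sunlight or Earth infrared are illustrative assumptions, not figures from Starcloud or Google.

```python
# Rough radiator sizing for an orbital data centre using the Stefan-Boltzmann law.
# Simplifying assumptions (illustrative only): the radiator faces deep space,
# absorbs no sunlight or Earth infrared, and radiates from one side only.

STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(heat_w: float, temp_k: float = 300.0,
                     emissivity: float = 0.9) -> float:
    """Area needed to radiate `heat_w` watts at surface temperature `temp_k`."""
    flux_w_per_m2 = emissivity * STEFAN_BOLTZMANN * temp_k ** 4
    return heat_w / flux_w_per_m2


if __name__ == "__main__":
    one_megawatt = 1e6
    print(f"1 MW at 300 K: ~{radiator_area_m2(one_megawatt):,.0f} m^2 of radiator")
    print(f"1 MW at 350 K: ~{radiator_area_m2(one_megawatt, temp_k=350):,.0f} m^2")
```

Under these assumptions, each megawatt needs roughly 2,400 m² of radiator, about a third of a football pitch. Running the radiator hotter shrinks it quickly, because radiated power grows with the fourth power of temperature.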

2. Chips: The New "Digital Gold"

If energy is the fuel, chips are the engine. We've all seen the news about NVIDIA. As of late 2025, NVIDIA is worth over $4.6 trillion, and its stock, which traded below $20 (split-adjusted) when ChatGPT launched in late 2022, now sits near $190 per share.

AI models rely on GPUs (Graphics Processing Units) because they can perform the thousands of multiply-and-add operations inside a "matrix multiplication" in parallel, while a standard CPU, with far fewer cores, works through them much more sequentially. NVIDIA dominates, but the "Chip Wars" are heating up:

- Custom Silicon: Google has its TPUs, Amazon has Trainium, and Microsoft has its Maia chips.

- Specialised Players: Companies like Groq are winning by focusing specifically on inference (running the model) rather than training it.
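Why does matrix multiplication suit GPUs so well? Every cell of the output is its own dot product and never depends on any other cell, so all of them can be computed at once. The pure-Python sketch below only illustrates that structure (it is not how GPU libraries are written; real GPU code, such as NVIDIA's cuBLAS, tiles the work across thousands of threads). It fills the result in a shuffled order and gets the same answer.

```python
# Every output cell C[i][j] is an independent dot product, so the cells can be
# computed in any order, or all at the same time. Pure Python: structure, not speed.
import random


def matmul_cell(a, b, i, j):
    """Dot product of row i of A with column j of B."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul(a, b, order=None):
    """Fill C cell by cell, in whatever order `order` lists the cells."""
    rows, cols = len(a), len(b[0])
    cells = order or [(i, j) for i in range(rows) for j in range(cols)]
    c = [[0] * cols for _ in range(rows)]
    for i, j in cells:  # on a GPU, these iterations would run in parallel
        c[i][j] = matmul_cell(a, b, i, j)
    return c


if __name__ == "__main__":
    a = [[1, 2, 3], [4, 5, 6]]          # 2 x 3
    b = [[7, 8], [9, 10], [11, 12]]     # 3 x 2
    in_order = matmul(a, b)
    shuffled = [(i, j) for i in range(2) for j in range(2)]
    random.shuffle(shuffled)
    print(in_order)                             # [[58, 64], [139, 154]]
    print(matmul(a, b, shuffled) == in_order)   # True: order doesn't matter
```

A model layer multiplies matrices with thousands of rows and columns, so there are millions of these independent dot products per step, which is exactly the kind of work a GPU's many cores can share.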

3. Infrastructure: The Modern Pyramids

If you think about it, we may be witnessing the largest construction project in human history. To make these models work, we've moved past the idea of just "a few servers." We are now building what Jensen Huang calls AI Factories.

I look at projects like Stargate, the joint venture between OpenAI, SoftBank, Oracle and MGX, and the scale is almost hard to wrap your head around. We're talking about an investment that could reach $500 billion. To put that in perspective, that's more than the cost of the International Space Station. These aren't just buildings; they are "gigawatt-scale" clusters.
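To connect "gigawatt-scale" back to the energy numbers from the first layer, here is a minimal sketch of how much electricity a campus uses in a year. It assumes the facility draws its full rated power around the clock (the `utilisation` parameter lets you lower that); the sizes are illustrative, not figures for any specific Stargate site.

```python
# What "gigawatt-scale" means in annual energy terms.
# Assumption (illustrative): by default the campus draws its full rated power
# around the clock; real facilities run below peak some of the time.

HOURS_PER_YEAR = 365 * 24  # 8,760


def annual_twh(power_gw: float, utilisation: float = 1.0) -> float:
    """Energy in TWh used by a facility drawing `power_gw` at `utilisation`."""
    return power_gw * utilisation * HOURS_PER_YEAR / 1_000  # GWh -> TWh


if __name__ == "__main__":
    one_gw_year = annual_twh(1.0)
    print(f"1 GW for a year: {one_gw_year:.2f} TWh")          # 8.76 TWh
    # Share of a ~1,000 TWh global data-centre projection
    print(f"Share of 1,000 TWh: {one_gw_year / 1_000:.2%}")   # 0.88%
```

So a single 1 GW campus running flat out uses about 8.76 TWh a year, close to 1% of the entire global data-centre projection on its own.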

But there's a catch: these machines get incredibly hot. I mentioned the energy crisis, but heat is just as big a problem. This is why the story is getting wilder: some companies are literally looking to the stars and researching data centres in Earth's orbit. Up there, you get two things that are hard to come by on Earth: near-constant solar power and the cold of deep space as a place to dump heat (though in a vacuum that heat can only escape by radiation, through large radiator panels). It sounds like science fiction, but when you're spending billions just to cool a room in a desert, orbit starts to look like a logical business move.

4. Models: The "Trust Me, Bro" Economy

This brings us to the "Big Dogs": the OpenAIs, the Anthropics, and the Googles of the world. This is where the story gets a bit mysterious.

These companies are playing a high-stakes game of poker. They are spending billions of dollars on "training runs" that can take up to a year to complete. Imagine spending $5 billion and waiting six months just to see if your new model is actually smarter than the last one! It's a massive gamble.
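The basic arithmetic behind a training budget is simple: number of accelerators × hours × price per accelerator-hour. The sketch below uses hypothetical round numbers (100,000 GPUs, about six months, $2 per GPU-hour) chosen only to show the scaling; they are not figures from OpenAI, Anthropic, Google or anyone else, and they ignore hardware purchases, staff, data and failed runs.

```python
# Back-of-the-envelope training cost: accelerators x hours x hourly price.
# All inputs below are hypothetical round numbers, not figures from any lab.

def training_compute_cost(num_gpus: int, days: float, usd_per_gpu_hour: float) -> float:
    """Rental-equivalent compute cost of a training run, in US dollars."""
    gpu_hours = num_gpus * days * 24
    return gpu_hours * usd_per_gpu_hour


if __name__ == "__main__":
    # Hypothetical run: 100,000 GPUs for six months (~180 days) at $2/GPU-hour
    cost = training_compute_cost(num_gpus=100_000, days=180, usd_per_gpu_hour=2.0)
    print(f"${cost / 1e9:.2f} billion")  # $0.86 billion
```

Because every term multiplies, doubling the GPU count, the duration or the hourly price doubles the bill, which is how frontier-scale budgets climb into the billions.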

When I look at their finances, the gap is hilarious. OpenAI has committed to spending roughly $1.4 trillion over the next decade, yet its annual revenue, reportedly around $13 billion for 2025, is "tiny" by comparison. How do they keep going? It's the "Trust Me, Bro" economy. Investors are betting that the first company to reach AGI (Artificial General Intelligence) will basically hold the keys to the future. Meanwhile, we've moved from models that just "chat" to models that reason. They still generate text one token at a time, but they now spend extra computation "thinking" through intermediate steps before answering, which is why they are becoming so much more capable, and so much more expensive to run.

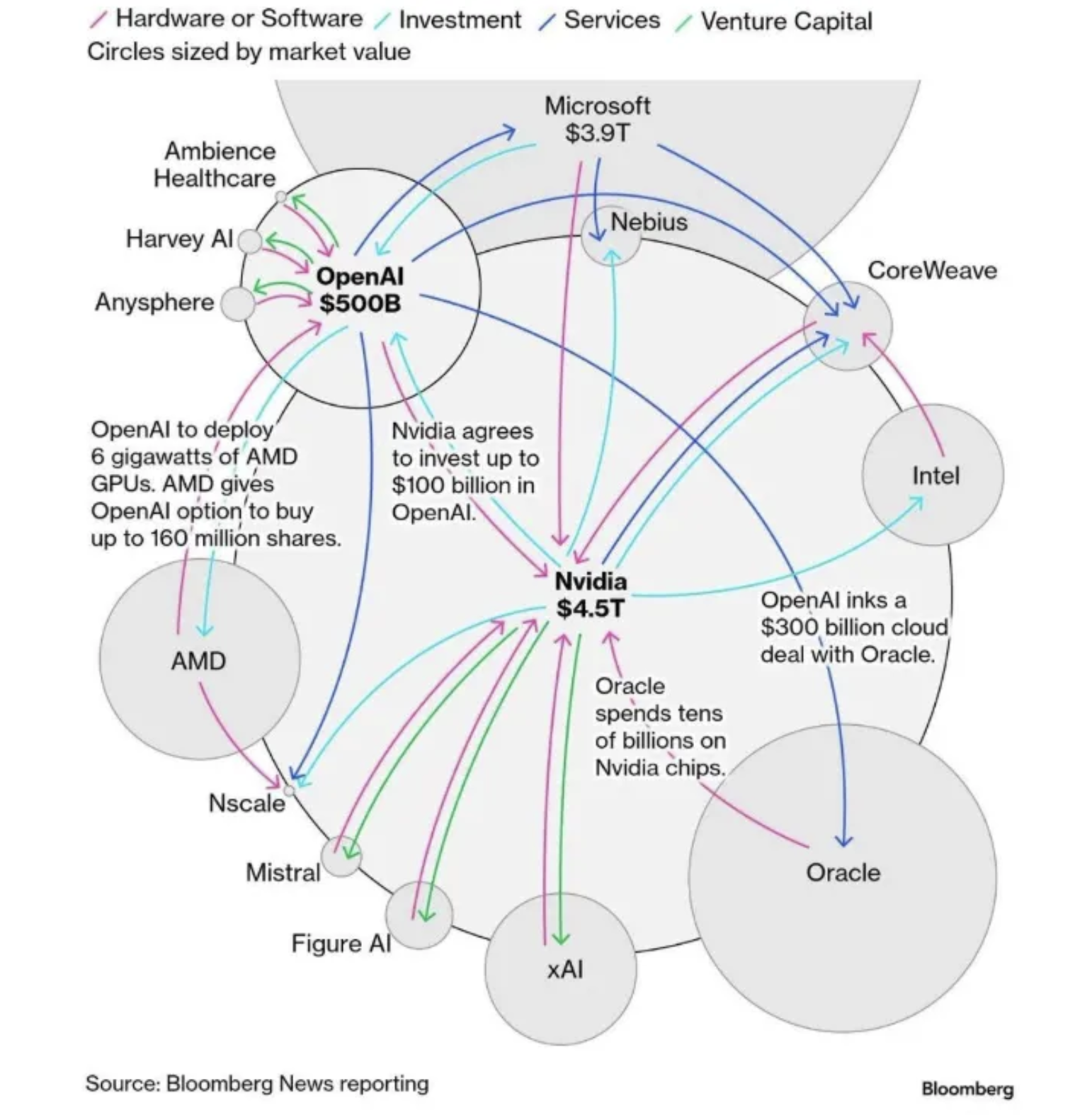

The interconnected web of investments, partnerships, and dependencies in the AI industry is staggering. From NVIDIA's $4.5 trillion market cap to OpenAI's $500 billion valuation, the relationships between these companies—through hardware deals, cloud services, and venture capital—show just how complex and interdependent this ecosystem has become.

5. Application: The Final Frontier (and the Graveyard)

Finally, we reach the layer where you and I live: the applications. This is the "face" of AI, but it's also where the most drama happens.

Most people start here because it's the easiest place to build, but it's also where 99% of startups go to die. Why? Because if your "business" is just a pretty interface on top of someone else's model, you don't really own anything.

However, the real story for the coming year isn't just about big chatbots; it's about SLMs (Small Language Models). Think of it this way: you don't need a PhD-level super-intelligence to help you organise your grocery list or draft a basic email. Using a massive model for a simple task is like using a rocket ship to go to the corner store—it's overkill and it's expensive.
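One way the "no rocket ship to the corner store" idea shows up in practice is a router that sends each request to the smallest model likely to handle it. A minimal sketch, assuming hypothetical model names (`local-slm`, `cloud-llm`), a made-up keyword list and a made-up 40-word threshold; real systems more often use a trained classifier or the small model's own confidence than keyword matching.

```python
# Hypothetical "right-size the model" router: simple requests go to a small
# local model; long or complex ones go to a large cloud model.
# Model names, keywords and the word threshold are made up for illustration.
from dataclasses import dataclass

SMALL_MODEL = "local-slm"   # hypothetical on-device model
LARGE_MODEL = "cloud-llm"   # hypothetical hosted frontier model

# Deliberately crude substring hints that a prompt needs multi-step reasoning
COMPLEX_HINTS = ("prove", "analyse", "analyze", "step by step", "plan", "debug")


@dataclass
class Route:
    model: str
    reason: str


def route(prompt: str, max_simple_words: int = 40) -> Route:
    """Pick a model using simple, illustrative heuristics."""
    text = prompt.lower()
    if any(hint in text for hint in COMPLEX_HINTS):
        return Route(LARGE_MODEL, "prompt asks for multi-step reasoning")
    if len(text.split()) > max_simple_words:
        return Route(LARGE_MODEL, "long prompt")
    return Route(SMALL_MODEL, "short, simple request")


if __name__ == "__main__":
    print(route("Sort my grocery list: milk, eggs, apples, bread"))
    print(route("Debug this race condition in my payment service"))
```

The grocery list stays on the small model, while the debugging request goes to the large one; the cost saving comes from how many everyday requests look like the first case.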

Many companies are now moving toward these smaller, "scrappier" models that can run locally on your phone or laptop. They are faster, they protect your privacy because your data doesn't have to leave the device, and they are much cheaper to run. This is where the technology actually becomes "essential": not when it's a giant brain in a data centre, but when it's a small, helpful tool that lives in your pocket and actually understands your world.
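The size gap is easy to quantify. As a rough rule of thumb, a model's weights need about (parameter count × bits per parameter ÷ 8) bytes of memory. The sketch below applies that to a few illustrative model sizes; it ignores activations, the KV cache and runtime overhead, so real memory use is somewhat higher.

```python
# Why small models fit on a phone and big ones don't: the weights alone need
# roughly (parameter count x bits per parameter / 8) bytes of memory.
# Ignores activations, the KV cache and runtime overhead, so real usage is higher.

def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for model weights, in gigabytes (10^9 bytes)."""
    # billions of parameters x bits / 8 bits-per-byte = gigabytes
    return params_billions * bits_per_param / 8


if __name__ == "__main__":
    examples = [
        ("3B model, 4-bit quantised", 3, 4),
        ("8B model, 4-bit quantised", 8, 4),
        ("70B model, 16-bit", 70, 16),
    ]
    for label, params, bits in examples:
        print(f"{label}: ~{weight_memory_gb(params, bits):.1f} GB")
```

A 3B-parameter model quantised to 4 bits needs about 1.5 GB for its weights, which a recent phone with 8 GB or more of RAM can spare; a 70B model at 16 bits needs about 140 GB, which is firmly data-centre territory.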

Wrapping Up

Looking back at where we started—back when "AI" was a word that got me weird looks in a coffee shop—it's clear that we aren't just looking at a trend. We are looking at a total rebuild of our world, from the power grid up to the apps on our screens.

Happy New Year, and here's to seeing what layer breaks first in 2026!